Researchers from two universities in the U.S. recently published a paper examining the Side Eye attack, a way to extract audio data from smartphone video. The premise needs a little explanation. When you record a video on your phone, both the image and the accompanying audio are captured. The authors set out to determine whether sound can be extracted from the image alone, even if the source lacks an audio track altogether. Imagine a video recording of a conversation between two businessmen, posted online with the sound removed to preserve the privacy of their discussion. It turns out that it’s possible, albeit with some caveats, to reconstruct speech from such a recording. This is due to a certain feature of the optical image stabilization system built into most latest-generation smartphones.

Optical stabilization and the side-channel attack

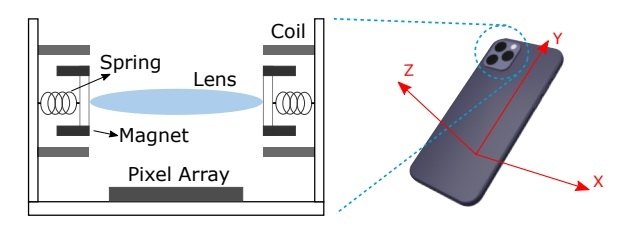

Optical stabilizers provide higher-quality images when shooting videos and photos. They smooth out hand tremors, camera shake while walking, and similar undesirable vibrations. For this stabilization to work, manufacturers make the camera’s sensor movable relative to the lens. Sometimes the lenses within the camera itself are also made movable. The general idea of optical stabilization is illustrated in the image below: when motion sensors in a smartphone or camera detect movement, the sensor or lens in the camera moves so that the resulting image remains steady. In this way, most small vibrations don’t affect the final video recording.

Diagram of the optical image stabilization system in modern cameras. Source

Understanding exactly how such stabilization works isn’t necessary. The important thing is that the camera elements are movable relative to each other. They can shift when necessary, aided by miniature components known as actuators. However, they can also be moved by external vibrations, such as those caused by loud sounds.

Imagine your smartphone lying on a table near a speaker, recording a video (without sound!). If the speaker’s loud enough, the table vibrates, and along with it the phone and the movable components of the optical stabilizer. In the recorded video, such vibrations translate into microscopic shaking of the objects captured. During casual viewing, this trembling is completely unnoticeable, but it can be detected through careful analysis of the video data.

Another problem arises here: a typical smartphone records video at 30, 60, or at best 120 frames per second. That is how often we get a chance to capture slight object shifts in the video, and it’s rather little. According to the Nyquist-Shannon sampling theorem, an analog signal (such as sound) can be reconstructed only if it is measured at a rate of at least twice its highest frequency. By measuring the “shake” of an image 60 times per second, we can at best reconstruct sound vibrations with a frequency of up to 30 hertz. However, human speech lies within the audio range of 300 to 3400 hertz. It looks like mission impossible!
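The arithmetic behind this limit can be checked in a few lines. This is a minimal sketch, not anything taken from the paper: the function names `max_recoverable_hz` and `covers_speech` are our own, and the speech band is the 300 to 3400 hertz range quoted above.

```python
# Illustrative only: helper names are hypothetical, not from the study.
SPEECH_BAND_HZ = (300.0, 3400.0)  # speech range quoted in the text


def max_recoverable_hz(sample_rate_hz: float) -> float:
    """Nyquist limit: the highest frequency recoverable at a given sampling rate."""
    return sample_rate_hz / 2.0


def covers_speech(sample_rate_hz: float) -> bool:
    """True if the Nyquist limit reaches the top of the speech band."""
    return max_recoverable_hz(sample_rate_hz) >= SPEECH_BAND_HZ[1]


if __name__ == "__main__":
    for fps in (30, 60, 120):
        limit = max_recoverable_hz(fps)
        print(f"{fps} fps: up to {limit:.0f} Hz, covers speech: {covers_speech(fps)}")
```

Even at 120 frames per second the limit is 60 hertz, well below the lower edge of the speech band.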

But another feature of most digital cameras comes to the rescue: the so-called rolling shutter. Each frame of video is captured not all at once, but line by line, from top to bottom. Consequently, by the time the last line of the image is “digitized”, fast-moving objects in the frame may already have shifted. This feature is most noticeable when shooting video from the window of a fast-moving train or car. Roadside posts and poles in such a video appear tilted, while in reality they’re perpendicular to the ground. Another classic example is taking a photo or video of a rapidly rotating airplane or helicopter propeller.

The relatively slow reading of data from the camera sensor means that the blades have time to move before the frame is completed. Source
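The tilted-pole effect described above can be estimated with a toy model. The sketch below is our own illustration under simplifying assumptions: the rows are read out at a constant pace, the object moves horizontally at a constant speed, and all the numbers (speed, readout time) are made up for the example.

```python
# Toy rolling-shutter model; every value here is an assumed, illustrative number.
def row_offsets_px(speed_px_per_s: float, rows: int, readout_time_s: float) -> list[float]:
    """Horizontal shift of a moving vertical object at each row of one frame.

    Row r is read r * (readout_time_s / rows) seconds after row 0, so the
    object has moved speed * that delay by the time the row is captured.
    """
    row_time_s = readout_time_s / rows
    return [speed_px_per_s * row_time_s * r for r in range(rows)]


if __name__ == "__main__":
    # A pole crossing the frame at 3000 px/s, 1080 rows read over 1/60 s.
    offsets = row_offsets_px(3000.0, 1080, 1 / 60)
    print(f"top row shift: {offsets[0]:.2f} px, bottom row shift: {offsets[-1]:.2f} px")
```

In this model a vertical pole comes out slanted by almost 50 pixels between the top and bottom of the frame, which is the kind of distortion seen in videos shot from a train window.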

We’ve shown a similar image before, in this post about an interesting method of attacking smart card readers. But how can this rolling shutter help us analyze micro-vibrations in a video? Because each line of a frame is read at a slightly different moment, every line serves as a separate measurement of the camera’s position, so the number of “samples” per second increases significantly. If video is recorded with a vertical resolution of 1080 pixels, that figure is multiplied by the number of frames per second (30, 60, or 120). So we end up being able to measure smartphone camera vibrations with much greater precision: tens of thousands of times per second, which is generally enough to reconstruct sound from the video. This is another example of a side-channel attack, in which exploiting an object’s non-obvious physical characteristics leads to the leakage of secrets. In this case, the leakage is the sound that the creators of the video tried to conceal.
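The "rows times frames" estimate can be worked through as follows. This is an idealized sketch with a function name of our own choosing; it assumes every row yields one usable sample and that rows are read evenly across the whole frame interval, which real sensors do not guarantee.

```python
# Idealized estimate; the function name and the even-readout assumption are ours.
SPEECH_TOP_HZ = 3400  # upper edge of the speech band quoted in the text


def rolling_shutter_sample_rate(rows: int, fps: int) -> int:
    """Upper-bound samples per second if every row counts as one measurement."""
    return rows * fps


if __name__ == "__main__":
    for fps in (30, 60, 120):
        rate = rolling_shutter_sample_rate(1080, fps)
        nyquist_hz = rate / 2
        print(f"{fps} fps x 1080 rows = {rate} samples/s, "
              f"Nyquist limit {nyquist_hz:.0f} Hz, "
              f"above speech band: {nyquist_hz > SPEECH_TOP_HZ}")
```

Even at 30 frames per second the idealized rate is 32,400 samples per second, which puts the theoretical limit comfortably above the speech band. The practical quality is far lower, as the next section shows.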

Difficulties in practical implementation

But… not so fast, tiger. Let’s not assume that, with this complex video signal processing, the authors of the study managed to restore clear and intelligible human speech. The graph on the left shows the original spectrogram of the audio recording, in which a person sequentially says “zero”, “seven”, and “nine”. On the right is the spectrogram of the sound restored from the video recording. Even here, it’s clear that a significant amount of data was lost in the restoration. On the project’s website, the authors have provided real recordings of both the original and restored speech. Check out the results to get a clear idea of the shortcomings of this sophisticated eavesdropping method. Yes, some sound can be reconstructed from the video, but it’s more a kind of weird rattling than human speech. It’s very difficult to guess which numeral the person is uttering. But even such heavily damaged data can be successfully processed using machine learning systems: if you give the algorithm known pairs of original and restored audio recordings to analyze, it can then infer and reconstruct unknown data.

Restoring audio from video using the rolling shutter effect. Source

The success of the algorithm was tested on relatively simple tasks, not on real-life human speech. The results are as follows: in almost 100% of cases, it was possible to correctly determine a person’s gender. In 86% of cases, it was possible to distinguish one speaker from another. In 67% of cases, it was possible to correctly recognize which digit a person was naming. And that is under ideal conditions, with the phone recording the video placed 10 centimeters away from the speaker on a glass tabletop. Change the tabletop to wood, and the accuracy starts to decrease. Move the phone farther away, and it gets even worse. Lower the volume to the level of a regular conversation, and the accuracy drops critically.

Now, let’s move on from theoretical considerations and try to imagine the real-life applications of the proposed scenario. We have to immediately exclude all “eavesdropping” scenarios. If a hypothetical spy with a phone can get close enough to the people having a secret conversation, the spy can easily record the sound with a microphone. What about a scenario where we record the people talking on a surveillance camera from a distance, and the microphone cannot capture the speech? In this case, we likewise won’t be able to reconstruct anything from the video: even when the researchers moved the camera away from the speaker by three meters, the system basically didn’t work (the numerals were correctly recognized in about 30% of cases).

Therefore, the beauty of this study lies simply in finding a new “side channel” of information leakage. Perhaps it will be possible to somehow improve the proposed scheme in the future. The authors’ main discovery is that the image stabilization system in smartphones, which is supposed to eliminate video vibrations, sometimes carefully records them in the final video. Moreover, this trick works on many modern smartphones. It’s enough to train the algorithm on one, and in most cases, it will be able to recognize speech from video recorded on another device.

Anyway, if somehow this “attack” is dramatically improved, then the fact that it analyzes recorded video becomes critical. We can fantasize about a situation in the future where we could download various videos from the internet without sound and find out what the people near the camera were talking about. Here, however, we face two additional problems. It wasn’t for nothing that the authors produced the speech from a speaker placed on the same table as the phone. Analyzing real human speech using this “video eavesdropping” method is much more complicated. Also, phone videos are usually shot handheld, introducing additional vibrations. But, you must agree, this is an elegant attack. It once again demonstrates how complex modern devices are, and that we should avoid making assumptions when it comes to privacy. If you’re being filmed on video, don’t rely on assurances that “they’ll change the audio track later”. After all, besides machine learning algorithms, there’s also the ancient art of lip reading.
